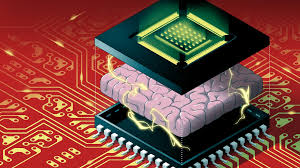

NEUROMORPHIC CHIPS

INTRODUCTION

Neuromorphic chips are processors designed to mimic the structure and function of the human brain.

They use artificial neurons and synapses to process information in a highly parallel, energy-efficient way, unlike conventional processors, which repeatedly shuttle data between separate memory and processing units.

These chips are inspired by biological neural networks and aim to make machine learning, artificial intelligence (AI), and real-time data processing faster and more energy-efficient.

WHY NEUROMORPHIC CHIPS?

Neuromorphic chips are used because they offer efficient, brain-like computing that addresses several limitations of traditional processors. Here’s why they are important:

- Low Power Consumption – Unlike conventional CPUs and GPUs, neuromorphic chips use event-driven computation: circuits do work only when a spike (an event) arrives, so they can consume significantly less energy, especially when activity is sparse. This makes them well suited to battery-powered devices such as wearables, drones, and IoT systems.

- Faster & Parallel Processing – Many artificial neurons operate at the same time, much like in the human brain, so these chips can process multiple data streams in parallel, enabling low-latency, real-time decision-making and faster AI computation.

- Adaptive & Learning Capabilities – Some neuromorphic chips support on-chip learning, adjusting their synaptic weights as new data arrives instead of being retrained and reprogrammed offline. This makes them promising for AI applications such as pattern recognition, robotics, and natural language processing.

- Better Performance for AI & Edge Computing – They bring AI closer to devices, reducing reliance on cloud computing and enabling real-time, efficient AI processing in edge devices like self-driving cars, security cameras, and smart assistants.
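A rough way to see why event-driven computation saves energy: a clocked dense layer uses every weight on every time step, while an event-driven layer only does work for the inputs that actually spiked. The sketch below counts synaptic operations in both cases. The layer size and the roughly 2% spike rate are arbitrary assumptions, and real energy savings depend on the hardware, not just on this operation count.

```python
import random

def dense_synaptic_ops(n_inputs: int, n_outputs: int) -> int:
    """A clocked dense layer touches every weight on every time step."""
    return n_inputs * n_outputs

def event_driven_step(weights, spikes):
    """Propagate only the inputs that spiked during this time step.

    weights: list of rows, weights[i][j] = synapse from input i to output j
    spikes:  list of bools, spikes[i] is True if input i fired
    Returns (output currents, number of synaptic operations performed).
    """
    n_out = len(weights[0])
    currents = [0.0] * n_out
    ops = 0
    for i, fired in enumerate(spikes):
        if not fired:
            continue  # silent input: no work is done for it
        row = weights[i]
        for j in range(n_out):
            currents[j] += row[j]  # spikes are binary, so an add replaces a multiply
            ops += 1
    return currents, ops

random.seed(0)
n_in, n_out = 1000, 100
W = [[random.gauss(0.0, 1.0) for _ in range(n_out)] for _ in range(n_in)]
spikes = [random.random() < 0.02 for _ in range(n_in)]  # assumed ~2% activity

currents, ops = event_driven_step(W, spikes)
print("dense:", dense_synaptic_ops(n_in, n_out), "event-driven:", ops)
```

The output currents are the same as a dense product with a 0/1 input vector; only the amount of work differs, and it shrinks as activity becomes sparser.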

WHAT TECHNOLOGIES ARE USED?

Spiking Neural Networks (SNNs)

- SNNs are brain-inspired networks where artificial neurons communicate through spikes (short bursts of electrical signals), similar to biological neurons.

- They enable efficient, event-driven processing, reducing energy consumption compared to traditional deep learning models.

- Example: Intel’s Loihi chip implements SNNs in hardware and supports on-chip, real-time learning.
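Spiking behaviour is commonly illustrated with the leaky integrate-and-fire (LIF) neuron model. The sketch below is a minimal software simulation with parameters (time constant, threshold, reset value) chosen only for illustration; it is not Loihi’s programming interface or its exact neuron model.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron with forward-Euler steps.

    input_current: sequence of input values, one per time step
    Returns the list of time steps at which the neuron spiked.
    """
    v = v_rest
    spike_times = []
    for t, i_in in enumerate(input_current):
        # Leak toward v_rest and integrate the input: dv/dt = (v_rest - v)/tau + I
        v += dt * ((v_rest - v) / tau + i_in)
        if v >= v_thresh:
            spike_times.append(t)  # emit a spike (a discrete event)...
            v = v_reset            # ...and reset the membrane potential
    return spike_times

weak = simulate_lif([0.03] * 100)
strong = simulate_lif([0.1] * 100)
print(len(weak), len(strong))
```

With these settings the weak input never fires, because its steady-state potential (I·tau = 0.6) stays below the threshold of 1.0, while the stronger input fires periodically. Information is carried by when, and how often, spikes occur.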

Memristors (Memory Resistors)

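- Memristors are electronic components whose resistance depends on the history of the current that has flowed through them, so a single device can both store a value and compute with it, much like a synapse in the brain.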

- They enable faster and more energy-efficient computing by reducing the need to transfer data between memory and processing units.

- Example: HP Labs and IBM are developing memristor-based neuromorphic chips.
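The idea of storing and processing data in the same place is easiest to see in a crossbar array: each memristor’s conductance stores a weight, and applying voltages to the rows makes each column current equal to a weighted sum (Ohm’s law per device, Kirchhoff’s current law per column). The sketch below models an ideal crossbar with illustrative values; real devices add noise, nonlinearity, wire resistance and limited conductance precision, all of which are ignored here.

```python
def crossbar_read(conductances, voltages):
    """Ideal memristor crossbar performing one matrix-vector multiply.

    conductances[i][j]: conductance (siemens) of the memristor joining row i
                        to column j; it plays the role of a synaptic weight.
    voltages[i]:        read voltage (volts) applied to row i.
    Returns column currents (amperes): I_j = sum_i G_ij * V_i.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for g_row, v in zip(conductances, voltages):
        for j in range(n_cols):
            # Ohm's law per device (I = G * V); Kirchhoff's current law sums
            # every device current flowing into the same column wire.
            currents[j] += g_row[j] * v
    return currents

G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]   # 3 rows x 2 columns, in siemens (illustrative values)
V = [0.2, 0.0, 0.1]  # volts
print(crossbar_read(G, V))
```

In hardware the loop does not exist: all device currents flow at once, so the whole multiply happens in a single analog read without moving the weights out of memory.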

3D Integrated Circuits (3D ICs)

- Unlike traditional planar (2D) circuits, 3D ICs stack multiple chip layers connected vertically, for example by through-silicon vias. Shorter wires between layers can improve processing speed and reduce power consumption.

- This structure enhances connectivity between neurons and synapses in neuromorphic processors, allowing for better parallel processing.

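- Example: IBM’s TrueNorth chip is a well-known brain-inspired processor, but it is a planar design whose 4,096 neurosynaptic cores are linked by a 2D on-chip network, so it is not itself an example of 3D stacking.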

APPLICATIONS

Robotics & Autonomous Systems

- Neuromorphic chips enable real-time decision-making with low power consumption, making them well suited to robots and self-driving cars.

- Example: Researchers are exploring neuromorphic computing for real-time sensor processing and object detection in self-driving cars.

Edge AI & IoT Devices

- Neuromorphic chips bring AI capabilities to edge devices like smart cameras, drones, and wearable tech, reducing reliance on cloud computing.

- This enables faster, more efficient real-time AI processing, even in low-power environments.

- Examples: on-device face recognition and anomaly detection.

PROS AND CONS OF NEUROMORPHIC CHIPS

Pros:

- Energy Efficiency – Neuromorphic chips consume significantly less power than traditional CPUs and GPUs, making them ideal for battery-powered and edge AI applications.

- Real-Time Processing – They enable fast, parallel processing, allowing for real-time decision-making in robotics, autonomous vehicles, and IoT devices.

Cons:

- Complex Programming – Neuromorphic computing requires specialized algorithms and programming models, making it challenging to develop and integrate into existing systems.

- Limited Commercial Adoption – While promising, neuromorphic chips are still in the early stages of commercialization, with limited availability and industry-wide adoption.